I like to think of harmonic distortion as an annoying guy in the band that you can’t kick out, who is hell-bent on playing exactly the same thing you play but an octave higher. As annoying as that might be, you’d probably rather he stick to octaves than play a perfect 5th on top of every note you play.

Simply stated, harmonic distortion is harmonically related sound found at the output of a piece of audio equipment that was not part of the sound at its input. Since it wasn’t there going in, the equipment must have created it.

Suppose you had a piano that played pure tones, with each key producing a pure sine wave of a single frequency. (Obviously, real-world pianos produce a rich series of harmonics with each note, which is why they sound the way they do, but for the sake of simplicity let’s just suppose a pure-tone piano existed.)

If you were to strike the A key just below middle C, the pure-tone piano would produce a perfect 220 Hz sine wave. If you recorded that sound with a perfectly transparent microphone plugged into a perfectly linear mic preamp in a perfectly dead room, the captured waveform would contain just that single frequency, 220 Hz.

In the real world, with a less-than-perfect acoustic space, microphone, and mic preamp, a waveform analysis would reveal trace amounts of additional frequencies that were not produced by the pure-tone piano, and those frequencies would be integer multiples of the original frequency.

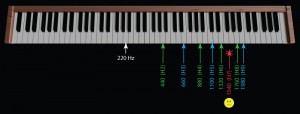

In general, the nth harmonic (Hn) sits at n times the fundamental frequency. So with an A note of 220 Hz, the 2nd harmonic (H2) would be 2 × 220 Hz = 440 Hz, the 3rd harmonic (H3) would be 3 × 220 Hz = 660 Hz, and so on.
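To see where those multiples come from, here is a minimal Python sketch that feeds a 220 Hz sine through a hypothetical, slightly nonlinear preamp and measures the output at H1 through H4. The transfer curve `preamp(x) = x + a·x² + b·x³` and its coefficients are assumptions made up for illustration, not a model of any real circuit; `amplitude_at` is a plain single-bin DFT, and the 0.1 s window is chosen so that every harmonic lands exactly on a DFT bin.

```python
import math

SAMPLE_RATE = 44100   # samples per second
DURATION = 0.1        # seconds; holds exactly 22 cycles of 220 Hz
FUNDAMENTAL = 220.0   # the pure-tone piano's A, in Hz

def preamp(x, a=0.02, b=0.04):
    """Hypothetical, slightly nonlinear preamp; a perfectly linear one would just return x."""
    return x + a * x**2 + b * x**3

def amplitude_at(samples, freq, sample_rate=SAMPLE_RATE):
    """Peak amplitude of the component at `freq`, measured with a single-bin DFT."""
    re = im = 0.0
    for n, s in enumerate(samples):
        phase = 2 * math.pi * freq * n / sample_rate
        re += s * math.cos(phase)
        im -= s * math.sin(phase)
    return 2 * math.hypot(re, im) / len(samples)

n_samples = round(SAMPLE_RATE * DURATION)
tone = [math.sin(2 * math.pi * FUNDAMENTAL * n / SAMPLE_RATE) for n in range(n_samples)]
output = [preamp(s) for s in tone]  # the "recorded" signal

for k in range(1, 5):
    print(f"H{k} ({k * FUNDAMENTAL:.0f} Hz): {amplitude_at(output, k * FUNDAMENTAL):.4f}")
```

With these coefficients, H2 and H3 each come out at 0.01 (1% of the input’s amplitude) while H4 is essentially zero: the x² term produces the 2nd harmonic, the x³ term produces the 3rd, and a curve with no higher-order terms cannot create H4. The x² term also adds a small DC offset, which the single-bin measurement ignores.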

The musical significance of these additional notes becomes clear when you look at them on a piano keyboard.

H2 is also an A note, just one octave up, so it will always be musically compatible with the fundamental. An octave can even add some richness to music, in the same way the octave-paired strings of a 12-string guitar do.

The other harmonics are as follows:

- H3 is an E

- H4 is an A

- H5 is a C#

- H6 is an E

- H7 (1540 Hz) isn’t even on the piano! It falls between F# and G, about 31 cents flat of G

- H8 is another A

- H9 is a B
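The note names in the list can be checked with a short Python sketch that maps each harmonic of 220 Hz to the nearest key of a standard 12-tone equal-tempered piano tuned to A4 = 440 Hz (an assumed tuning reference; the article doesn’t state one). `nearest_note` is a hypothetical helper, and it uses the common MIDI convention in which note 69 is A4 and note 60 is middle C.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def nearest_note(freq_hz, a4_hz=440.0):
    """Return (note name, cents offset) of the nearest 12-tone equal-tempered pitch."""
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)  # fractional MIDI note number, A4 = 69
    nearest = round(midi)
    octave = nearest // 12 - 1                   # MIDI 60 is middle C (C4)
    cents = 100 * (midi - nearest)               # positive = sharp, negative = flat
    return f"{NOTE_NAMES[nearest % 12]}{octave}", cents

fundamental = 220.0  # the A just below middle C
for k in range(1, 10):
    name, cents = nearest_note(k * fundamental)
    print(f"H{k}: {k * fundamental:6.0f} Hz -> {name:>3} ({cents:+.0f} cents)")
```

It reports H7 about 31 cents flat of G6, which is why that harmonic has no key of its own, and it also shows that H5 sits roughly 14 cents flat of the piano’s C#.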

If all these notes were played at the same time you played the A, your nice A note would sound terrible, so it should be obvious why harmonic distortion is a problem and why engineers work so hard to try to get rid of it.

Unfortunately, as hard as we try to get rid of these superfluous tones, we can never eliminate them completely, so it’s worth asking whether they are all equally annoying, whether some are more annoying than others, and whether any of them are actually desirable.

Beginner’s Luck

Long before transistors were commonplace, audio equipment relied on vacuum tubes for amplification, and the tubes used for voltage gain were often triodes. Harmonic distortion was a well-understood concept at the time, and engineers measured it and produced specs to ensure their designs were performing acceptably. But what counted as “acceptable”? A gold standard was needed: a maximum level of distortion low enough to be considered negligible.

The scientists of the day set out to determine what this gold standard should be, and they did not take this responsibility lightly. Many experiments were done using human test subjects, and after much effort it was agreed that the maximum tolerable level should be 1%, and that any further reduction in distortion was barely detectable by the human ear.

Enter the Transistor

The transistor tantalized companies with the promise of reduced cost, reduced size, wider profit margins, and improved efficiency. When it arrived, designers were all too happy to abandon the fragile, expensive glass bottle in favor of a tiny, shiny new nugget of silicon, and they set out to design amplifiers that met that same 1% THD+N (total harmonic distortion plus noise) specification. It didn’t take long before functional transistor amplifiers were developed that met the 1% target, but there was a big problem: they sounded terrible!

The problem with the early transistor amplifiers was not the total harmonic distortion; it was the mix of individual harmonics that made up that total. Triode-based tube amplifiers tend to put very little energy into the higher harmonics, so a tube amp with 1% THD exhibits mostly 2nd harmonic and very little of anything else. A 2nd-harmonic tone 40 dB below the fundamental (1% of its amplitude) is quite difficult for the human ear to detect. Early transistor amplifiers, on the other hand, were dominated by odd harmonics, primarily the 3rd and 5th, to which the human ear is significantly more sensitive.
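One common way to compute THD is the RMS sum of the harmonic amplitudes divided by the fundamental, and that is exactly why two very different spectra can earn the same number. The Python sketch below uses that definition (it leaves out the noise term in THD+N) with two made-up spectra: one all 2nd harmonic, one split between the 3rd and 5th. `thd_percent` and `percent_to_db` are hypothetical helpers for illustration, and neither spectrum is a measurement of a real amplifier.

```python
import math

def thd_percent(fundamental, harmonics):
    """THD (noise excluded) in percent: RMS sum of harmonic amplitudes over the fundamental."""
    return 100 * math.sqrt(sum(h * h for h in harmonics)) / fundamental

def percent_to_db(percent):
    """Express a distortion percentage as dB relative to the fundamental (amplitude ratio)."""
    return 20 * math.log10(percent / 100)

# Harmonic amplitudes listed as [H2, H3, H4, H5], relative to a fundamental of 1.0.
# Both spectra are invented for illustration.
tube_like = [0.01, 0.0, 0.0, 0.0]            # all 2nd harmonic
transistor_like = [0.0, 0.008, 0.0, 0.006]   # odd harmonics only

print(f"tube-like:       {thd_percent(1.0, tube_like):.2f}% THD")
print(f"transistor-like: {thd_percent(1.0, transistor_like):.2f}% THD")
print(f"1% THD = {percent_to_db(1.0):.1f} dB relative to the fundamental")
```

Both spectra come out at 1% THD, and `percent_to_db(1.0)` gives -40 dB, matching the figure quoted above, even though one spectrum contains only octaves and the other only 3rd and 5th harmonics.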

A comparison of 2nd-harmonic and 3rd-harmonic distortion waveforms hints at why the ear is more sensitive to one than the other. The following waveforms each show 15% THD, made up entirely of 2nd harmonic in the top waveform and entirely of 3rd harmonic in the bottom one, an exaggerated level chosen to help show the effect:

Both of these theoretical amplifiers would have exactly the same THD+N specification, but they would not sound the same at all! Even though both waveforms look severely distorted, the top waveform still resembles a sine wave, while the bottom one more closely resembles a square wave. Square waves tend to sound “sharp,” “buzzy,” or “harsh,” and listening to them for prolonged periods brings on ear fatigue more rapidly than listening to a sine wave.
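The visual difference between the two waveforms can be put into a number with crest factor, the ratio of peak level to RMS level: a sine wave sits at about 1.414 and a square wave at exactly 1.0. This Python sketch builds one cycle of each 15% case and compares them. It assumes the added harmonic is a sine wave in phase with the fundamental; the phases in the original waveforms aren’t stated, and the shape depends on them. `waveform` and `crest_factor` are hypothetical helpers.

```python
import math

def waveform(harmonic, level=0.15, n=10000):
    """One cycle of a unit sine plus a single in-phase harmonic at `level` (0.15 = 15%)."""
    return [math.sin(2 * math.pi * i / n) + level * math.sin(2 * math.pi * harmonic * i / n)
            for i in range(n)]

def crest_factor(samples):
    """Peak amplitude divided by RMS: about 1.414 for a sine, exactly 1.0 for a square wave."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return peak / rms

pure = waveform(harmonic=1, level=0.0)   # undistorted reference sine
with_h2 = waveform(harmonic=2)           # 15% THD, all 2nd harmonic
with_h3 = waveform(harmonic=3)           # 15% THD, all 3rd harmonic

for label, wave in [("sine", pure), ("15% H2", with_h2), ("15% H3", with_h3)]:
    print(f"{label:>7}: crest factor {crest_factor(wave):.3f}")
```

The 3rd-harmonic case drops to roughly 1.21, moving toward the square wave’s 1.0 as its tops flatten, while the 2nd-harmonic case rises to roughly 1.46 and keeps a recognizably sine-like shape. Flipping the sign of the 3rd harmonic would sharpen the peaks instead, so treat this as one illustrative case rather than a general rule.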

It’s important to remember that harmonic distortion does not discriminate; every note of every chord will produce its own series of harmonics, and if the amplifier is dominated by 3rd and 5th harmonics, that’s a lot of extra notes ringing out that weren’t there to begin with!
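To make that concrete, this Python sketch takes an A major triad (A3, C#4, E4, chosen only as an example) and lists the nearest piano keys for the 3rd and 5th harmonics of each chord tone, assuming standard equal temperament and the MIDI convention where 60 is middle C. The helpers `midi_to_name` and `harmonic_note` are hypothetical, written just for this illustration.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def midi_to_name(midi):
    """Name an integer MIDI note number, e.g. 57 -> 'A3' (MIDI 60 = C4)."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"

def harmonic_note(midi, k):
    """Nearest equal-tempered note to the k-th harmonic of a MIDI note."""
    return midi_to_name(round(midi + 12 * math.log2(k)))

# An A major triad: A3, C#4, E4 (MIDI 57, 61, 64), chosen purely for illustration.
chord = [57, 61, 64]
chord_pitch_classes = {NOTE_NAMES[m % 12] for m in chord}

# 3rd and 5th harmonics of every chord tone, as nearest piano keys.
extra_notes = {midi_to_name(m): [harmonic_note(m, k) for k in (3, 5)] for m in chord}
print(extra_notes)

# Pitch classes the distortion introduces that the chord itself never contained.
new_classes = {name.rstrip("0123456789") for notes in extra_notes.values() for name in notes}
print(sorted(new_classes - chord_pitch_classes))
```

Three of the resulting pitch classes, B, F, and G#, are not in the chord at all. The F is the piano’s nearest key to the 5th harmonic of C#, which a musician would spell E#, and it sits about 14 cents flat of the F key.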

So what became of the transistor amplifier? Engineers realized that in order to achieve comparable sound quality with an amplifier producing mostly 3rd and 5th harmonic distortion, the maximum allowable level of distortion had to be reduced by 10 to 20 dB, to 0.3% or even 0.1% THD+N.
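The dB figures translate into percentages with the amplitude rule percent × 10^(-dB/20). A quick Python check, where `reduce_by_db` is just a hypothetical helper:

```python
def reduce_by_db(percent, db):
    """Lower a distortion percentage by `db` decibels (amplitude ratio, hence /20)."""
    return percent * 10 ** (-db / 20)

for db in (10, 20):
    print(f"1% reduced by {db} dB -> {reduce_by_db(1.0, db):.3f}%")
```

It prints about 0.316% for a 10 dB reduction and 0.100% for 20 dB, consistent with the 0.3% and 0.1% figures.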

New ways of expressing THD+N that weighted the individual harmonics separately were developed to provide a more accurate and fair comparison, but they were not widely adopted, for one main reason: if transistor amplifiers needed a much stricter THD+N figure just to approach the sound quality of tube amplifiers, the companies making them wanted to be able to brag about that lower number as an “improvement.” After all, 0.1% distortion looks like a big improvement over 1% distortion, and it was just too tempting for the marketing guys to use this juicy spec to help sell transistor amplifiers!
